Social media giants will have to explain what they are doing to protect Australians from violent extremists and terrorists as the country's online safety regulator puts them on notice.

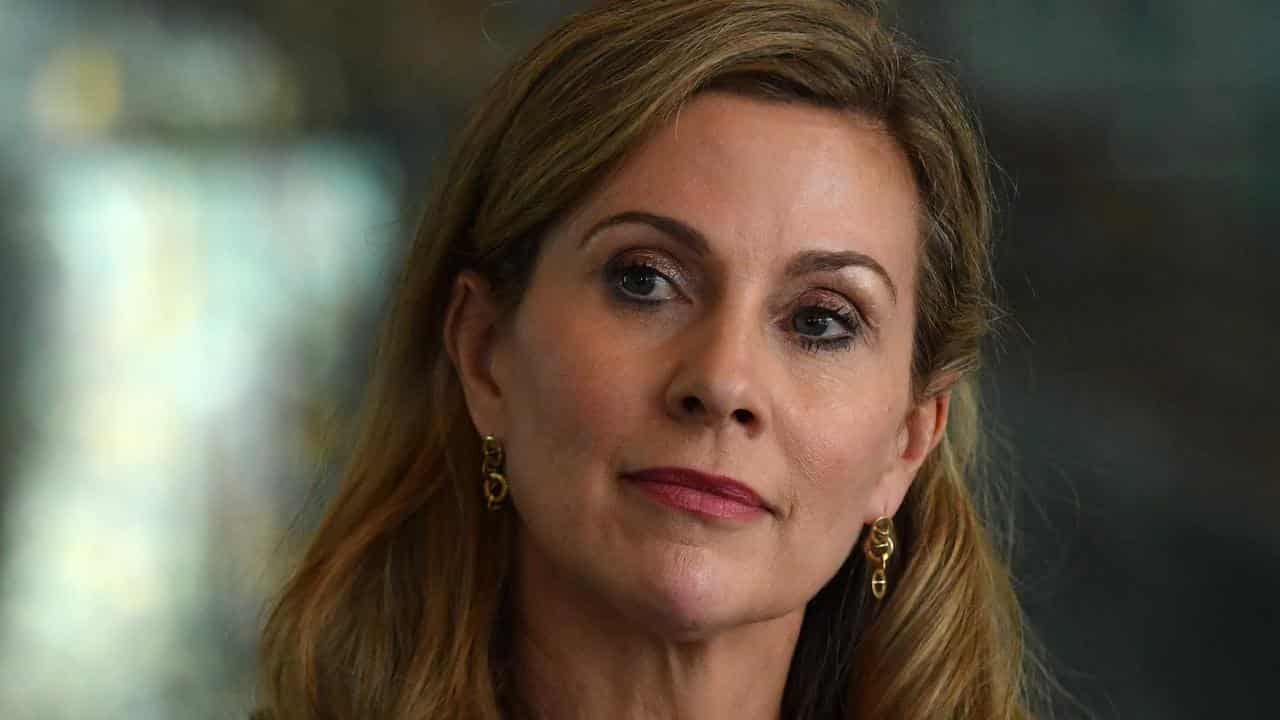

The eSafety Commissioner announced on Tuesday that she had issued legal notices to Meta, X, Google, WhatsApp, Telegram and Reddit, demanding that each company report on the steps it was taking to protect Australians from such content online.

Violent and extremist content on social media has been blamed for online radicalisation leading to terror attacks, including the 2019 Christchurch mosque shootings, which killed 51 people.

The same year, a gunman killed two people when he opened fire outside a synagogue in the eastern German city of Halle on the Jewish holy day of Yom Kippur, and live-streamed part of his rampage.

In 2022, a gunman murdered 10 black Americans in Buffalo, New York, and live-streamed part of the attack, with Reddit content cited as playing a part in his radicalisation.

The shooting was one of the deadliest targeted attacks on black people by a lone white gunman in US history.

The social media companies would have to answer a series of detailed questions about how they were tackling online terrorist and extremist material under eSafety Commissioner Julie Inman Grant's directive.

The commissioner was still fielding reports about terror content being re-shared online, she said.

“We remain concerned about how extremists weaponise technology like live-streaming, algorithms and recommender systems and other features to promote or share this hugely harmful material,” Ms Inman Grant said.

“We are also concerned by reports that terrorists and violent extremists are moving to capitalise on the emergence of generative AI and are experimenting with ways this new technology can be misused to cause harm.”

Fresh alarm bells were raised when United Nations-funded Tech Against Terrorism reported that an Islamic State forum was looking into the benefits of different AI platforms, such as ChatGPT, Google Gemini and Microsoft Copilot, the commissioner said.

Technology companies have a responsibility to ensure their services are not exploited, Ms Inman Grant said.

“It’s no coincidence we have chosen these companies to send notices to as there is evidence that their services are exploited by terrorists and violent extremists,” she said.

"Disappointingly, none of these companies have chosen to provide this information through the existing voluntary framework – developed in conjunction with industry – provided by the OECD."

Telegram was recently ranked as containing the most terror and extremist content, while YouTube was second, X was third, and Facebook and Instagram were fourth and fifth, respectively, in an OECD report.

Opposition communications spokesman David Coleman welcomed the eSafety Commissioner's announcement, saying self-regulation clearly did not work.

"This kind of content is completely abhorrent and the fight against it must continue to be a top priority," Mr Coleman said.

"The big digital platforms must absolutely be held accountable for the content they publish and profit from."

The six social media companies have 49 days to respond to the eSafety Commissioner's demands.

The eSafety Commissioner also planned to ask Telegram and Reddit about what they were doing to remove child sexual exploitation and abuse material from their platforms.

Lifeline 13 11 14

beyondblue 1300 22 4636

1800 RESPECT (1800 737 732)

National Sexual Abuse and Redress Support Service 1800 211 028